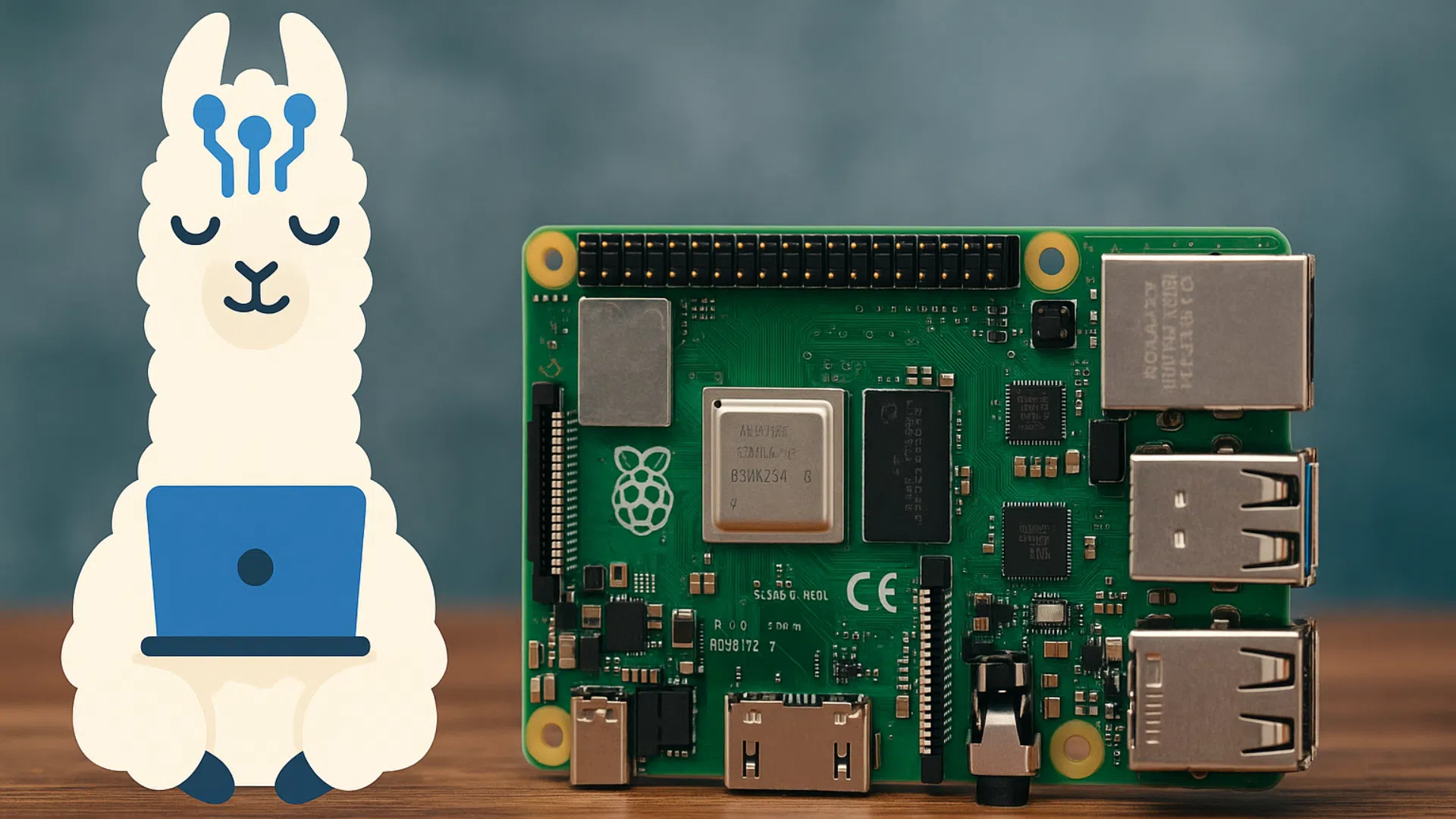

Ollama on Raspberry Pi: artificial intelligence in your own home

Introduction

Artificial intelligence is no longer the preserve of digital giants. Today, it's possible to run advanced language models directly on your own machine, without connecting to remote servers.

This is exactly what Ollama offers: an open-source tool for running AI models such as LLaMA 2, Mistral or Vicuna on a personal computer.

And the good news? With a little creativity, you can also run Ollama on a Raspberry Pi, an affordable way to turn this little computer into a personal AI mini-lab.

What is Ollama?

Ollama is a runtime for large language models (LLMs) that makes it easy to deploy and use AI models locally.

Its strengths:

- Local execution → no need for a cloud.

- Multi-model compatibility → LLaMA 2, Mistral, Falcon, Gemma, Vicuna...

- Simple interface → a clear command line and a REST API for developers.

- Privacy → your data never leaves your machine.

In short, Ollama democratizes access to advanced AI by letting anyone run these models at home.
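To give an idea of what the REST API looks like from a program, here is a minimal Python sketch using only the standard library. It assumes an Ollama server listening on its default address, `http://localhost:11434`, and a model that has already been downloaded (for example with `ollama pull tinyllama`). The function names are our own, not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama listening on its default local address and port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> bytes:
    """Encode a non-streaming /api/generate request body as JSON bytes."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(payload).encode("utf-8")


def generate(model: str, prompt: str, url: str = OLLAMA_URL, timeout: float = 300.0) -> str:
    """Send one prompt to a running Ollama server and return the generated text."""
    request = urllib.request.Request(
        url,
        data=build_generate_request(model, prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # A generous timeout: small boards can take a while to answer.
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": false, the whole answer is in the "response" field.
    return body["response"]
```

Calling `generate("tinyllama", "Explain what a Raspberry Pi is in one sentence.")` returns the model's answer as a string. Setting `"stream": False` keeps the example simple; by default, Ollama streams the answer piece by piece.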

Why would you want Ollama on Raspberry Pi?

1. Local AI at lower cost

A Raspberry Pi 5 costs much less than a high-end PC. Even though its resources are limited, it can serve as an experimental AI server for getting started with Ollama.

2. Confidentiality and control

With Ollama, everything stays local. On a Raspberry Pi, you get a self-contained AI setup that does not depend on any external service.

3. Accessibility

A Pi is compact, quiet and energy-efficient. It can run permanently as a personal mini-server.

4. For learning and experimenting

Running Ollama on a Pi gives you a better understanding of how LLMs work, their limitations and their uses, without investing in an expensive machine.

Limits to keep in mind

Let's face it: the Raspberry Pi, even in version 5, doesn't have the raw power of a PC with a dedicated graphics card.

Main constraints:

- Limited RAM → 4 to 8 GB (up to 16 GB on some editions), whereas large language models often require dozens of GB.

- No native GPU acceleration → the Pi 5 relies mainly on its ARM CPU, which limits performance.

- Longer response times → even a lightweight model answers noticeably slowly on the Pi, and larger models add considerable latency.

👉 Clearly, the Raspberry Pi won't replace a high-end AI station. But for testing, hosting a lightweight model or developing educational projects, it's perfect.
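To put figures on that latency on your own Pi, you can read the timing fields Ollama includes in a completed `/api/generate` response: `total_duration`, `load_duration`, `prompt_eval_count`, `prompt_eval_duration`, `eval_count` and `eval_duration`, with durations in nanoseconds. The sketch below, with a function name of our choosing, turns them into tokens per second.

```python
NS_PER_S = 1_000_000_000  # Ollama reports durations in nanoseconds


def generation_speed(response: dict) -> dict:
    """Summarize the timing fields of a completed Ollama /api/generate response."""
    stats = {
        "total_s": response["total_duration"] / NS_PER_S,
        "load_s": response.get("load_duration", 0) / NS_PER_S,
        "generated_tokens": response["eval_count"],
        # Generation speed: output tokens divided by the time spent producing them
        "tokens_per_s": response["eval_count"] / (response["eval_duration"] / NS_PER_S),
    }
    # Prompt-processing speed, when the server reports it
    if response.get("prompt_eval_duration"):
        stats["prompt_tokens_per_s"] = response.get("prompt_eval_count", 0) / (
            response["prompt_eval_duration"] / NS_PER_S
        )
    return stats
```

Pass it the decoded JSON of a non-streaming call; comparing `tokens_per_s` across models is a quick way to see which ones are usable on your board.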

How can Ollama be used on Raspberry Pi?

Personal chatbot

Installing Ollama on the Pi allows you to create a personal assistant accessible from a browser or via API.
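A personal assistant needs to remember the conversation. Ollama's `/api/chat` endpoint takes the whole message history on each call, so a minimal client only has to keep that list. Here is a sketch assuming the default local server address; the `ChatSession` class is a hypothetical helper, not something Ollama provides.

```python
import json
import urllib.request
from typing import Optional

CHAT_URL = "http://localhost:11434/api/chat"  # assumption: default local server


class ChatSession:
    """Hypothetical helper that keeps the conversation history for /api/chat."""

    def __init__(self, model: str, system_prompt: Optional[str] = None):
        self.model = model
        self.messages = []
        if system_prompt:
            self.messages.append({"role": "system", "content": system_prompt})

    def payload(self, user_text: str) -> dict:
        """Request body: the full history plus the new user message."""
        messages = self.messages + [{"role": "user", "content": user_text}]
        return {"model": self.model, "messages": messages, "stream": False}

    def ask(self, user_text: str, url: str = CHAT_URL, timeout: float = 300.0) -> str:
        body = self.payload(user_text)
        request = urllib.request.Request(
            url,
            data=json.dumps(body).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(request, timeout=timeout) as resp:
            reply = json.loads(resp.read().decode("utf-8"))["message"]
        # Commit the exchange to the history only once the server has answered
        self.messages = body["messages"] + [
            {"role": reply["role"], "content": reply["content"]}
        ]
        return reply["content"]
```

Each call to `ask()` resends the full history, which also counts against the model's context window; trimming old messages keeps long conversations within it.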

Home AI server

You could connect Ollama to a home automation system (Home Assistant, Node-RED) and interact with your connected home in natural language.
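One way to wire this up, sketched here under clear assumptions: ask the model to reply only with JSON (Ollama's `"format": "json"` option in `/api/generate`), then validate that JSON against a whitelist before anything reaches your home automation system. The device names, the JSON shape and the helper functions are hypothetical, and the actual call to Home Assistant or Node-RED is left out.

```python
import json

# Hypothetical devices and actions: replace with the entities of your own installation.
ALLOWED = {
    "living_room_light": {"on", "off"},
    "heater": {"on", "off"},
}

SYSTEM_PROMPT = (
    "Translate the user's request into JSON of the form "
    '{"device": <name>, "action": <action>}. '
    "Known devices: " + ", ".join(sorted(ALLOWED)) + ". Actions: on, off."
)


def build_intent_request(model: str, command: str) -> dict:
    """Body for Ollama's /api/generate asking for a JSON-only answer."""
    return {
        "model": model,
        "system": SYSTEM_PROMPT,
        "prompt": command,
        "format": "json",  # asks Ollama to constrain the output to valid JSON
        "stream": False,
    }


def parse_intent(model_output: str):
    """Return (device, action) if the model's JSON is valid and whitelisted, else None."""
    try:
        intent = json.loads(model_output)
    except json.JSONDecodeError:
        return None
    if not isinstance(intent, dict):
        return None
    device, action = intent.get("device"), intent.get("action")
    if isinstance(device, str) and isinstance(action, str):
        if device in ALLOWED and action in ALLOWED[device]:
            return device, action
    return None
```

Validating the output matters because a small model running on a Pi can easily produce a device name that does not exist; `parse_intent` returns `None` in that case instead of guessing.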

Educational laboratory

A Raspberry Pi with Ollama is an excellent tool for learning about AI: trying out small fine-tuned models, testing prompts, integrating models into apps.
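For testing prompts, a simple approach is to run the same set of prompts at several temperatures and compare the answers. This sketch only builds the request bodies for `/api/generate`, using Ollama's `options` field (`temperature`, `seed`); the function name and the default seed value are our own assumptions.

```python
import itertools


def prompt_experiments(model: str, prompts: list, temperatures: list, seed: int = 42) -> list:
    """Build one /api/generate request body per (prompt, temperature) pair."""
    runs = []
    for prompt, temperature in itertools.product(prompts, temperatures):
        runs.append({
            "model": model,
            "prompt": prompt,
            "stream": False,
            # A fixed seed should make reruns with identical settings repeatable,
            # so differences come from the prompt or the temperature.
            "options": {"temperature": temperature, "seed": seed},
        })
    return runs
```

Each body can then be sent with a POST to `http://localhost:11434/api/generate` (the default local address) and the answers saved side by side for comparison.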

Local server for developers

Programmers can use the Pi as an AI backend, for example to generate text, summarize notes or test LLM applications.
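For a backend that summarizes notes, streaming is useful on a Pi: Ollama sends the answer as newline-delimited JSON objects, each carrying a piece of text in its `response` field, with `done` set on the last one, so the first words can be shown long before the summary is finished. A minimal sketch assuming the default local address; the system prompt and function names are illustrative.

```python
import json
import urllib.request

GENERATE_URL = "http://localhost:11434/api/generate"  # assumption: default local server


def summary_request(model: str, notes: str) -> dict:
    """Request body asking the model to summarize some notes, streamed."""
    return {
        "model": model,
        "system": "Summarize the user's notes in three short bullet points.",
        "prompt": notes,
        "stream": True,
    }


def join_stream(lines, on_chunk=None) -> str:
    """Rebuild the answer from newline-delimited JSON chunks."""
    parts = []
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        chunk = json.loads(line)
        piece = chunk.get("response", "")
        if on_chunk is not None:
            on_chunk(piece)  # e.g. display the text as soon as it arrives
        parts.append(piece)
        if chunk.get("done"):
            break
    return "".join(parts)


def summarize(model: str, notes: str, on_chunk=None, url: str = GENERATE_URL) -> str:
    request = urllib.request.Request(
        url,
        data=json.dumps(summary_request(model, notes)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=600) as resp:
        # Iterating over the HTTP response yields one line (bytes) at a time
        return join_stream((raw.decode("utf-8") for raw in resp), on_chunk)
```

For example, `summarize("tinyllama", notes_text, on_chunk=lambda t: print(t, end="", flush=True))` prints the summary as it is generated and also returns the full text, where `notes_text` is whatever notes you load.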

Which models to use on Raspberry Pi?

Given the Pi's limited resources, it's best to choose:

- Optimized, quantized models (reduced size, e.g. Q4 or Q5).

- Small models (ideally under 3B parameters, with 7B as the upper limit), such as:

  - LLaMA 2 7B, quantized (upper limit).

  - Mistral 7B, in a lighter quantized version.

  - TinyLlama or GPT4All-J (better suited).

👉 Objective: a compromise between speed and quality of response.
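Why quantization matters can be shown with a back-of-the-envelope calculation: the weights alone take roughly (number of parameters × bits per weight) / 8 bytes. The sketch below applies that rule with nominal parameter counts and treats Q4/Q5 as exactly 4 and 5 bits per weight. Real quantized files are somewhat larger (they also store scaling factors), and the context cache and runtime need extra memory, so treat the results as lower bounds.

```python
def weights_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Rough lower bound for the memory taken by a model's weights, in GB (10^9 bytes).

    Ignores quantization metadata, the context (KV) cache and runtime overhead.
    """
    # (params_billions * 1e9 weights) * bits / 8 bits-per-byte, divided by 1e9 bytes per GB
    return params_billions * bits_per_weight / 8


# Nominal parameter counts; Q4/Q5 treated as exactly 4 and 5 bits per weight.
examples = [
    ("TinyLlama 1.1B, Q4", 1.1, 4),
    ("Mistral 7B, Q4", 7, 4),
    ("LLaMA 2 7B, Q5", 7, 5),
    ("LLaMA 2 7B, 16-bit", 7, 16),
]
for name, params, bits in examples:
    print(f"{name:<20} ~{weights_size_gb(params, bits):.1f} GB")
```

By this estimate, an unquantized 7B model (16-bit weights, about 14 GB) does not fit on an 8 GB Pi, while a 4-bit version (about 3.5 GB) does and still leaves room for the system and the context.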

Raspberry Pi 5: a good candidate for Ollama

Compared to previous versions, the Pi 5 offers a real leap in power:

- Faster CPU (quad-core ARM Cortex-A76 at 2.4 GHz).

- Up to 8 GB of RAM (or even 16 GB on some versions).

- NVMe SSD support for storing large models.

This opens the door to realistic, if modest, AI experiments.
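Before downloading a model to the Pi, it can help to check how much memory is actually free. On Linux, including Raspberry Pi OS, the kernel reports this as `MemAvailable` in `/proc/meminfo` (labelled kB, i.e. units of 1024 bytes). A minimal sketch; the 20% safety margin is an arbitrary assumption, and the required size is an estimate you supply.

```python
def mem_available_gb(meminfo_text: str) -> float:
    """Extract MemAvailable from the contents of /proc/meminfo, in GB (10^9 bytes)."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemAvailable:"):
            kib = int(line.split()[1])  # the kernel's "kB" means 1024 bytes
            return kib * 1024 / 1e9
    raise ValueError("MemAvailable not found")


def fits_in_ram(required_gb: float, meminfo_text: str, headroom: float = 1.2) -> bool:
    """True if the model plus an assumed 20% safety margin fits in free RAM."""
    return required_gb * headroom <= mem_available_gb(meminfo_text)


def read_meminfo(path: str = "/proc/meminfo") -> str:
    with open(path) as f:
        return f.read()
```

On the Pi itself, `fits_in_ram(3.5, read_meminfo())` tells you whether a model needing about 3.5 GB is likely to fit right now.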

Advantages and disadvantages

✅ Benefits

- An inexpensive way to test Ollama.

- Compact and silent (ideal as a secondary server).

- Learn and experiment with AI locally.

- Respect for data privacy.

❌ Disadvantages

- Limited power (high latency).

- Does not support very large models (>7B).

- Not suitable for intensive professional use.

Conclusion

Installing Ollama on a Raspberry Pi is not a choice made for raw performance. It won't replace a PC with a GPU, but it is an accessible gateway to local AI.

With a Raspberry Pi 5 and lighter models, it's now possible to build your own personal AI mini-server that, depending on your needs, can act as a local chatbot, a home automation assistant or a learning lab.

👉 If you are curious, a maker or a developer, Ollama on Raspberry Pi is an excellent way to explore AI differently: more local, more private and more accessible.